While looking at performance optimizations for a Rails project, I noticed this query in my debug console:

```text
ActsAsTaggableOn::Tag Load (0.5ms) SELECT "tags".* FROM "tags" INNER JOIN "taggings" ON "tags"."id" = "taggings"."tag_id" WHERE "taggings"."taggable_id" = $1 AND "taggings"."taggable_type" = $2 AND (taggings.context = ('tags')) [["taggable_id", 103], ["taggable_type", "Prediction"]]
```

This makes sense: my project uses acts-as-taggable-on to tag models, so loading a record’s tags means joining through the taggings table. However, our tagging needs are quite simple, and since we are using Postgres, I wondered whether storing tags in a Postgres array column might be more efficient. To get a feel for the basic concept, see 41 studio’s write-up.
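To make the difference concrete, here is a toy, in-memory sketch of the two layouts. Plain Ruby hashes and arrays stand in for database tables, and the constant and method names are hypothetical (they belong to neither Rails nor acts-as-taggable-on). With acts-as-taggable-on, an article’s tags are found by going through taggings rows, mirroring the join in the query above; with an array column, the tags are simply part of the row.

```ruby
# Hypothetical in-memory stand-ins for the two schemas (not real ActiveRecord models).

# acts-as-taggable-on layout: tag names live in a tags table, and a taggings
# table links each tag to a record, so reading tags means a join.
TAGS = { 1 => "TAG1", 2 => "TAG2" }.freeze
TAGGINGS = [
  { tag_id: 1, taggable_id: 103, taggable_type: "Prediction", context: "tags" },
  { tag_id: 2, taggable_id: 104, taggable_type: "Prediction", context: "tags" }
].freeze

# Mirrors the logged query: filter taggings by id, type and context,
# then follow tag_id over to the tags table.
def tags_via_join(taggable_id, taggable_type)
  TAGGINGS
    .select { |t| t[:taggable_id] == taggable_id && t[:taggable_type] == taggable_type && t[:context] == "tags" }
    .map { |t| TAGS.fetch(t[:tag_id]) }
end

# Postgres array layout: the tag names are stored on the row itself.
PREDICTION = { id: 103, tags: ["TAG1"] }.freeze

def tags_inline(row)
  row[:tags]
end

p tags_via_join(103, "Prediction") # => ["TAG1"]
p tags_inline(PREDICTION)          # => ["TAG1"]
```

The join version has to scan and cross-reference two collections; the inline version just reads a field. That is the intuition behind trying arrays, though real database costs depend on indexes and query plans.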

However, before going to all that trouble, I’d like to know whether the performance gains are appreciable. Ruby’s Benchmark module, run from a Rake task inside the Rails environment, makes this pretty easy to measure.
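If you haven’t used Ruby’s Benchmark module before, here is a minimal standalone sketch (the shuffled-alphabet workload is only a placeholder). Benchmark.measure returns a Benchmark::Tms whose utime, stime, total and real fields correspond to the user, system, total and real columns in the benchmark reports further down; Benchmark.realtime returns wall-clock seconds only.

```ruby
require "benchmark"

# Placeholder workload: shuffle the alphabet 10,000 times.
work = -> { 10_000.times { ("a".."z").to_a.shuffle.join } }

# Benchmark.measure returns a Benchmark::Tms. Its utime, stime, total and real
# fields are what Benchmark prints as the user, system, total and real columns.
tms = Benchmark.measure(&work)
puts format("user=%.4f system=%.4f total=%.4f real=%.4f",
            tms.utime, tms.stime, tms.total, tms.real)

# Benchmark.realtime is a shortcut when only wall-clock seconds matter.
seconds = Benchmark.realtime(&work)
puts format("realtime=%.4f", seconds)
```

The real column is the one to watch for database-bound code, since user and system only count CPU time spent in the Ruby process.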

Full source code is available at https://github.com/adamnengland/rails-tag-bench, or you can follow along step by step below.

Getting Started

You’ll need:

- Rails 4.0.2
- Ruby 2.0.0
- Postgres.app on OS X (though you can certainly modify this to work with any Postgres install)

Create a new Rails project:

```shell
rails new rails-tag-bench
cd rails-tag-bench
```

Open the Gemfile and add:

```ruby
gem 'pg', '0.17.1'
```

(I had to install the pg gem against Postgres.app’s pg_config first:)

```shell
gem install pg -- --with-pg-config=/Applications/Postgres93.app/Contents/MacOS/bin/pg_config
```

Then run bundle install to get your dependencies.

Replace config/database.yml with:

```yaml
development:
  adapter: postgresql
  encoding: unicode
  database: rails_tag_bench
  pool: 5
  username: rails_tag_bench
  password:

test:
  adapter: sqlite3
  database: db/test.sqlite3
  pool: 5
  timeout: 5000

production:
  adapter: sqlite3
  database: db/production.sqlite3
  pool: 5
  timeout: 5000
```

We’ll need a database user, so open psql and issue:

```sql
create user rails_tag_bench with SUPERUSER;
```

Okay, let’s create the database:

```shell
rake db:create
```

Then, in psql, connect to it to confirm that the database is set up:

```text
\c rails_tag_bench
```

We’ll also need acts-as-taggable-on, so add it to the Gemfile:

```ruby
gem 'acts-as-taggable-on', '2.4.1'
```

Then install it, generate its migration, and migrate:

```shell
bundle install
rails g acts_as_taggable_on:migration
rake db:migrate
```

Let’s start with the taggable version:

```shell
rails g model ArticleTaggable title:string body:text
rake db:migrate
```

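Open the generated app/models/article_taggable.rb and edit it:

```ruby
class ArticleTaggable < ActiveRecord::Base
  # Adds tag_list and the tags/taggings associations from acts-as-taggable-on.
  acts_as_taggable
end
```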

Let’s set up the benchmark by generating a Rake task:

```shell
rails g task bench
```

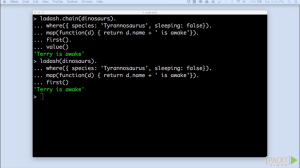

Fill in the generated lib/tasks/bench.rake like so:

```ruby
require 'benchmark'

namespace :bench do
  # Time creating 1,000 articles, each tagged 'TAG1' via acts-as-taggable-on.
  task writes: :environment do
    Benchmark.bmbm do |x|
      x.report("Benchmark 1") do
        1_000.times do
          ArticleTaggable.create(:title    => ('a'..'z').to_a.shuffle[0,8].join,
                                 :body     => ('a'..'z').to_a.shuffle[0,100].join,
                                 :tag_list => ['TAG1'])
        end
      end
    end
  end

  # Time 1,000 lookups of random articles, loading their tags as well.
  task reads: :environment do
    Benchmark.bmbm do |x|
      x.report("Benchmark 1") do
        1_000.times do
          ArticleTaggable.includes(:tags).find_by_id(Random.new.rand(1000..2000))
        end
      end
    end
  end
end
```
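One quirk in the generated test data: ('a'..'z').to_a.shuffle[0,100] slices a 26-element array, so each body is 26 characters long, not 100. That is harmless for a side-by-side comparison, since every model gets data generated the same way, but if you want genuinely longer bodies, a sampling helper like the hypothetical random_letters below would do it. Swapping it in would change the workload relative to the numbers reported in this post.

```ruby
LETTERS = ("a".."z").to_a.freeze

# What the benchmark does: slicing a shuffled 26-element array with [0, 100]
# returns at most 26 elements, so the "100-character" body is 26 characters.
body = LETTERS.shuffle[0, 100].join
puts body.length # => 26

# Hypothetical helper: sample letters with replacement to get exactly n of them.
def random_letters(n)
  Array.new(n) { LETTERS.sample }.join
end

puts random_letters(100).length # => 100
```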

You can run the benchmarks like so:

```shell
rake db:reset
rake bench:writes
rake bench:reads
```

Which should give you output like this:

```text
➜ rails-tag-bench rake bench:writes
Rehearsal -----------------------------------------------
Benchmark 1   8.620000   0.340000   8.960000 ( 10.716852)
-------------------------------------- total: 8.960000sec

                  user     system      total        real
Benchmark 1   8.540000   0.320000   8.860000 ( 10.543746)

➜ rails-tag-bench rake bench:reads
Rehearsal -----------------------------------------------
Benchmark 1   2.930000   0.160000   3.090000 (  3.906484)
-------------------------------------- total: 3.090000sec

                  user     system      total        real
Benchmark 1   2.880000   0.150000   3.030000 (  3.825437)
```
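Note the Rehearsal section: Benchmark.bmbm runs every report block twice, first as a rehearsal to let the runtime environment stabilize, then again for the measured pass (with a GC.start before it). The tiny sketch below just counts the calls. A consequence, as far as I can tell, is that rake bench:writes inserts 2,000 rows per model rather than 1,000, and since rake db:reset starts from a fresh database, ids run from 1 to 2,000, which is presumably why the read task picks ids from 1000..2000.

```ruby
require "benchmark"

calls = 0

# bmbm executes each report block twice: once in the "Rehearsal" pass and
# once in the measured pass that follows it.
Benchmark.bmbm do |x|
  x.report("count calls") { calls += 1 }
end

puts "block ran #{calls} times" # => block ran 2 times
```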

So, on my MacBook Air, acts-as-taggable-on wrote 1,000 records in about 10.54 seconds and read 1,000 records in about 3.83 seconds (real time, measured pass).
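Per record, that works out to roughly 10.5 ms per write and 3.8 ms per read. The arithmetic, using the real (wall-clock) column from the measured pass above:

```ruby
# "real" (wall-clock) seconds from the measured (non-rehearsal) runs above.
write_seconds = 10.543746
read_seconds  = 3.825437
records       = 1_000

ms_per_write = write_seconds / records * 1000
ms_per_read  = read_seconds / records * 1000

puts format("%.2f ms per write, %.2f ms per read", ms_per_write, ms_per_read)
# => 10.54 ms per write, 3.83 ms per read
```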

Now let’s implement the same example using Postgres arrays and see where we land:

```shell
rails g model ArticlePa title:string body:text tags:string
```

Edit the new migration as follows:

```ruby
class CreateArticlePas < ActiveRecord::Migration
  def change
    create_table :article_pas do |t|
      t.string :title
      t.text :body
      # Store tags inline as a Postgres string array column, defaulting to [].
      t.string :tags, array: true, default: []
      t.timestamps
    end
  end
end
```

Then migrate:

```shell
rake db:migrate
```

Then update lib/tasks/bench.rake to time both approaches:

```ruby
require 'benchmark'

namespace :bench do
  task writes: :environment do
    Benchmark.bmbm do |x|
      # Tags written through acts-as-taggable-on (tags and taggings tables).
      x.report("Using Taggable") do
        1_000.times do
          ArticleTaggable.create(:title    => ('a'..'z').to_a.shuffle[0,8].join,
                                 :body     => ('a'..'z').to_a.shuffle[0,100].join,
                                 :tag_list => ['TAG1'])
        end
      end

      # Tags written straight into the article_pas.tags array column.
      x.report("Using Postgres Arrays") do
        1_000.times do
          ArticlePa.create(:title => ('a'..'z').to_a.shuffle[0,8].join,
                           :body  => ('a'..'z').to_a.shuffle[0,100].join,
                           :tags  => ['TAG1'])
        end
      end
    end
  end

  task reads: :environment do
    Benchmark.bmbm do |x|
      # Load each article along with its tags through the acts-as-taggable-on associations.
      x.report("Using Taggable") do
        1_000.times do
          ArticleTaggable.includes(:tags).find_by_id(Random.new.rand(1000..2000))
        end
      end

      # Tags come back as part of the row, so no extra association is loaded.
      x.report("Using Postgres Arrays") do
        1_000.times do
          ArticlePa.find_by_id(Random.new.rand(1000..2000))
        end
      end
    end
  end
end
```

```shell
rake db:reset
rake bench:writes
rake bench:reads
```

The Results

```text
➜ rails-tag-bench rake bench:writes
Rehearsal ---------------------------------------------------------
Using Taggable          8.520000   0.330000   8.850000 ( 10.532700)
Using Postgres Arrays   1.460000   0.110000   1.570000 (  2.082705)
----------------------------------------------- total: 10.420000sec

                            user     system      total        real
Using Taggable          8.340000   0.310000   8.650000 ( 10.221277)
Using Postgres Arrays   1.410000   0.110000   1.520000 (  2.012559)

➜ rails-tag-bench rake bench:reads
Rehearsal ---------------------------------------------------------
Using Taggable          2.920000   0.160000   3.080000 (  3.898911)
Using Postgres Arrays   0.420000   0.060000   0.480000 (  0.700684)
------------------------------------------------ total: 3.560000sec

                            user     system      total        real
Using Taggable          2.870000   0.140000   3.010000 (  3.805598)
Using Postgres Arrays   0.400000   0.060000   0.460000 (  0.677917)
```

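For my money, Postgres arrays are much faster, which comes as little surprise: in the measured pass, writes dropped from 10.22 to 2.01 seconds and reads from 3.81 to 0.68 seconds. Keeping tags in the row cuts out the extra tag and tagging inserts on writes and the trip through taggings on reads. Most of the gap shows up in the user column, so a good share of the saving appears to be ActiveRecord work on the Ruby side, not just database time.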
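The speedup ratios, computed from the real column of the measured (non-rehearsal) pass:

```ruby
# Measured "real" seconds from the second (non-rehearsal) pass above.
results = {
  writes: { taggable: 10.221277, arrays: 2.012559 },
  reads:  { taggable: 3.805598,  arrays: 0.677917 }
}

results.each do |task, t|
  speedup = t[:taggable] / t[:arrays]
  puts format("%-6s arrays are %.1fx faster", task, speedup)
end
# writes arrays are 5.1x faster
# reads  arrays are 5.6x faster
```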

However, it is important to note that this isn’t an apples-to-apples comparison. Acts-As-Taggable-On provides a lot of functionality that simple arrays do not, such as multiple tag contexts, tag counts and tagged_with queries. More importantly, this approach locks you into Postgres, which may or may not be a problem for you. Still, if your tagging needs really are simple, the performance improvements might be worth it.
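As one small example of what you would take on yourself: acts-as-taggable-on lets you assign tag_list a comma-separated string and parses it for you. With a plain array column you would need something like the hypothetical parse_tag_list helper below. It is a sketch; the name, the delimiter default and the cleanup rules (strip, drop blanks, de-duplicate) are assumptions here, not the gem’s exact behavior.

```ruby
# Hypothetical helper: turn user input like "ruby, rails ,ruby," into a clean
# array suitable for assigning to a Postgres array column such as tags.
def parse_tag_list(input, delimiter: ",")
  input.to_s
       .split(delimiter)
       .map(&:strip)
       .reject(&:empty?)
       .uniq
end

p parse_tag_list("ruby, rails ,ruby,") # => ["ruby", "rails"]
p parse_tag_list(nil)                  # => []
```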